Using tables as Queues

March 26, 2010

A very common question asked on all programming forums is how to implement queues based on database tables. This is actually not a trivial question. Implementing a queue backed by a table is notoriously difficult, error-prone and susceptible to deadlocks. Because queues are usually needed as a link between various processing stages in a workflow, they operate in highly concurrent environments where multiple processes enqueue rows into the table while multiple processes attempt to dequeue these rows. This concurrency creates correctness, scalability and performance challenges.

But since SQL Server 2005 introduced the OUTPUT clause, using tables as queues is no longer a hard problem. This fact is called out in the OUTPUT Clause topic in BOL:

You can use OUTPUT in applications that use tables as queues, or to hold intermediate result sets. That is, the application is constantly adding or removing rows from the table… Other semantics may also be implemented, such as using a table to implement a stack.

The reason the OUTPUT clause is critical is that it offers an atomic destructive read operation: it allows us to remove the dequeued row and return it to the caller in one single statement.

Heap Queues

The simplest queue is a heap: producers can enqueue into the heap and consumers can dequeue, but the order of operations is not important. The consumers can dequeue any row, as long as it is unlocked.

create table HeapQueue (

Payload varbinary(max));

go

create procedure usp_enqueueHeap

@payload varbinary(max)

as

set nocount on;

insert into HeapQueue (Payload) values (@payload);

go

create procedure usp_dequeueHeap

as

set nocount on;

delete top(1) from HeapQueue with (rowlock, readpast)

output deleted.Payload;

go

A heap queue can satisfy most producer-consumer patterns. It scales well and is very simple to implement and understand. Notice the (rowlock, readpast) hints on the delete operation: they allow concurrent consumers to dequeue rows from the table without blocking each other. A heap queue makes no order guarantees.
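As a usage illustration, the following sketch exercises the two procedures above. The payload values are arbitrary, and it assumes HeapQueue starts out empty. It also shows why a consumer would typically dequeue inside the same transaction that processes the row: if that transaction rolls back, the delete is undone and the row is available again.

```sql
-- Enqueue three sample payloads (arbitrary values, for illustration only).
exec usp_enqueueHeap 0x01;
exec usp_enqueueHeap 0x02;
exec usp_enqueueHeap 0x03;

-- Dequeue one row inside a transaction, then simulate a processing failure.
begin transaction;
exec usp_dequeueHeap;   -- returns one of the rows; which one is not guaranteed
rollback;               -- the delete is undone, the row is back in the queue

-- Assuming the table was empty beforehand, all three rows are still present.
select count(*) as RemainingRows from HeapQueue;
```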

FIFO Queues

When the enqueue and dequeue operations have to support a certain order, two changes have to be made:

- The table must be organized as a clustered index ordered by a key that preserves the desired dequeue order.

- The dequeue operation must contain an ORDER BY clause to guarantee the order.

create table FifoQueue (

Id bigint not null identity(1,1),

Payload varbinary(max));

go

create clustered index cdxFifoQueue on FifoQueue (Id);

go

create procedure usp_enqueueFifo

@payload varbinary(max)

as

set nocount on;

insert into FifoQueue (Payload) values (@payload);

go

create procedure usp_dequeueFifo

as

set nocount on;

with cte as (

select top(1) Payload

from FifoQueue with (rowlock, readpast)

order by Id)

delete from cte

output deleted.Payload;

go

By adding the IDENTITY column to our queue and making it the clustered index key, we can dequeue in the order inserted. The enqueue operation is identical to that of our heap queue, but the dequeue is slightly changed, as the requirement to dequeue in the order inserted means that we have to specify an ORDER BY. Since the DELETE statement does not support ORDER BY, we use a Common Table Expression to select the row to be dequeued, then delete this row and return the payload in the OUTPUT clause. Isn’t this the same as doing a SELECT followed by a DELETE, and hence exposed to the traditional correctness problems with table backed queues? Technically, it is. But this is a SELECT followed by a DELETE that actually works for table based queues. Let me explain.

Because the query is actually a DELETE of a CTE, the query execution will occur as a DELETE, not as a SELECT followed by a DELETE, and also not as a SELECT executed in the context of the DELETE. The crucial part is that the SELECT part will acquire LCK_M_U update locks on the rows scanned. LCK_M_U is compatible with LCK_M_S shared locks, but is incompatible with another LCK_M_U. So two concurrent dequeue threads will not try to dequeue the same row. One will grab the first free row, the other thread will grab the next row.

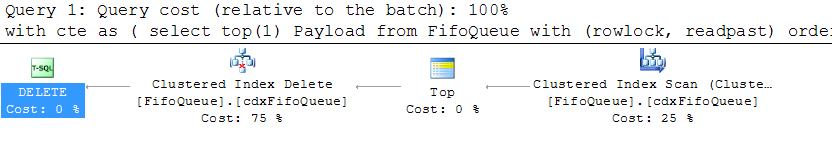
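The locking behavior described above can be observed with two connections. This is only a sketch: it assumes FifoQueue holds at least two rows, and the statements marked "Session 2" must be run from a separate connection while the transaction in session 1 is still open.

```sql
-- Session 1: dequeue the first row but keep the transaction open,
-- so the lock on the dequeued row is still held.
begin transaction;
exec usp_dequeueFifo;

-- Session 2 (separate connection): this call should not block.
-- READPAST skips the row locked by session 1 and dequeues the next one.
exec usp_dequeueFifo;

-- Either session: list the locks currently held or requested in this database.
-- Session 1 is expected to show a lock on the key of the row it deleted.
select request_session_id, resource_type, request_mode, request_status
from sys.dm_tran_locks
where resource_database_id = db_id();

-- Session 1: finish the work and release the locks.
commit;
```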

It is also worth looking at how compact a plan usp_dequeueFifo has:

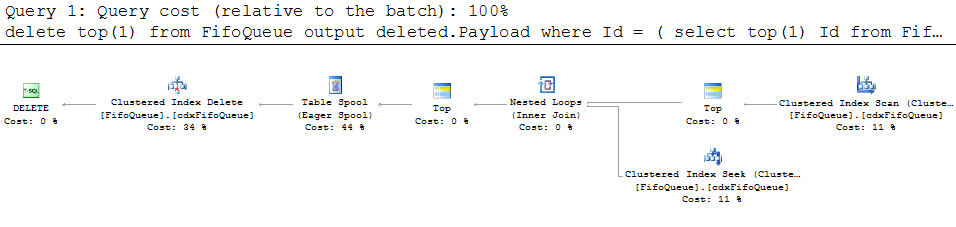

Compare this with the alternative of using a subquery to locate the row to be deleted:

delete top(1) from FifoQueue

output deleted.Payload

where Id = (

select top(1) Id

from FifoQueue with (rowlock, updlock, readpast)

order by Id)

Strict Ordering and Concurrency

Strict FIFO ordering in a database world has to take transactions into account. If transaction T1 dequeues row A, transaction T2 dequeues the next row B, and then T1 rolls back and T2 commits, row B was processed out of order. So any dequeue operation would have to wait for the previous dequeue to commit before proceeding. While this is correct, it is also highly inefficient, as it means that all transactions must serialize access to the queue. Many applications accept out-of-order processing for the sake of achieving reasonable scalability and performance.

If strict FIFO order is required then you have to remove the readpast hint from the usp_dequeueFifo procedure. When this is done, only one transaction can dequeue rows from the queue at a time. All other transactions will have to wait until the first one commits. This is not an implementation artifact; it is a fundamental requirement derived from the ACID properties of transactions.

If a lax FIFO order is acceptable, then the readpast hint will ensure that multiple transactions can dequeue rows concurrently. However, the strict FIFO order cannot be guaranteed in this case.
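For illustration, a strict variant of the dequeue could look like the sketch below. The procedure name usp_dequeueFifoStrict is made up for this example; the only change from usp_dequeueFifo is that the readpast hint is gone, so a second caller waits on the locked row instead of skipping it.

```sql
-- Hypothetical strict-order variant of usp_dequeueFifo (the name is illustrative).
create procedure usp_dequeueFifoStrict
as
set nocount on;
with cte as (
    select top(1) Payload
    from FifoQueue with (rowlock)   -- no readpast: wait for the head row
    order by Id)
delete from cte
output deleted.Payload;
go
```

With this variant, a caller that runs the procedure while another transaction still holds the head row will block until that transaction commits or rolls back, which is exactly the serialization described above.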

LIFO Stacks

A stack backed by a table is also possible. The implementation and table structure are almost identical to those of the FIFO queue, with one difference: the ORDER BY clause specifies DESC.

with cte as (

select top(1) Payload

from FifoQueue with (rowlock, readpast)

order by Id DESC)

delete from cte

output deleted.Payload;

Because all operations (enqueue and dequeue) occur on the same rows, stacks implemented as tables tend to create a very hot spot on the page that currently contains these rows. Because all row insert, delete and update operations need to acquire the page latch exclusively, and stacks operate on rows grouped at one end of the table, the result is high page latch contention on this page. Queues have the same problem, but to a lesser extent: the operations are divided between inserts at one end of the table and deletes at the other end, so the same number of operations is split over two hot spots instead of the single one in the stack case.

Pending Queues

Another category of queues is pending queues. Items are inserted with a due date, and the dequeue operation returns rows that are due at dequeue time. This type of queue is common in scheduling systems.

create table PendingQueue (

DueTime datetime not null,

Payload varbinary(max));

create clustered index cdxPendingQueue on PendingQueue (DueTime);

go

create procedure usp_enqueuePending

@dueTime datetime,

@payload varbinary(max)

as

set nocount on;

insert into PendingQueue (DueTime, Payload)

values (@dueTime, @payload);

go

create procedure usp_dequeuePending

as

set nocount on;

declare @now datetime;

set @now = getutcdate();

with cte as (

select top(1)

Payload

from PendingQueue with (rowlock, readpast)

where DueTime < @now

order by DueTime)

delete from cte

output deleted.Payload;

go

I chose to use UTC times for my example, and I highly recommend you do the same for your applications. Not only does this eliminate the problem of having to deal with time zones, but your pending operations will also behave correctly on the two occasions each year when summer time enters into effect or ends.
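A short usage sketch for the pending queue follows. The five-minute delay and the payload value are arbitrary, and the comment on the dequeue assumes the queue holds no other rows that are already due.

```sql
-- Schedule an item to become due five minutes from now, in UTC.
declare @due datetime;
set @due = dateadd(minute, 5, getutcdate());

exec usp_enqueuePending @dueTime = @due, @payload = 0x01;

-- Called right away, this returns an empty result set:
-- the row just enqueued is not yet due and is left in the table.
exec usp_dequeuePending;
```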

Why not use built-in Queues?

SQL Server has Queues, right? After all, what else do statements like CREATE QUEUE and DROP QUEUE refer to? Well, not really. SQL Server includes Service Broker, it’s true, and Service Broker uses these message stores that, for lack of a better term, were called Queues. But even during product development serious consideration was given to whether a different term should be used instead of ‘queue’ (e.g. ‘message store’). In the end the decision was made to use the term ‘queue’ given the industry familiarity with the term. But make no mistake, Service Broker ‘queues’ are not a generic queue storage for anyone to use. They are intended solely as a store of messages for Service Broker. If you try to use them as a generic queue, several shortcomings will become immediately apparent:

- Difficult to enqueue. With Service Broker Queues you need to begin a conversation and send a message on it in order to enqueue something into a queue. What is a conversation, you ask? My point exactly: the semantics exposed by Service Broker are those needed for its purpose, namely reliable messaging. You should not have to learn about services, contracts, message types, routes and remote service bindings just to enqueue a row into a queue.

- Fixed structure. Service Broker Queues have a specific table structure that cannot be altered in any fashion. You cannot add, alter or drop columns, you cannot change the indexes, you cannot change the clustered index. The Service Broker queues schema is designed for the RECEIVE verb and for the conversation group locking semantics of Service Broker, but that schema may not be what is optimal for your case.

- Lack of maintenance options. I blogged about this issue in my article Dealing with Large Queues. With Service Broker Queues you cannot use any of the table maintenance DDL, like rebuilding or reorganizing an index; you cannot use DMVs like sys.dm_db_index_physical_stats; nor can you change the various table options via sp_tableoption.

However, Service Broker has one ace up its sleeve: Activation. Queue processing is often associated with event-driven programming, and the ability to launch a procedure that handles incoming rows as they are enqueued is always required with queues. Triggers don’t work, as processing has to occur after the enqueue is committed. And scheduled SQL Agent jobs don’t adapt to the variable rates and spikes that queues experience: if they are too aggressive they’ll burn CPU, but if they are too passive the latency increases even under no load. It is hard enough to tune a job for a sweet spot under a constant load; add a variable load with spikes and the task becomes impossible. Unfortunately, table based queues have no substitute for Activation: you have to handle the processing as a separate task that polls the queue for new rows. The only way to leverage Activation, with its sweet mix of non-polling and self load balancing, is to use Service Broker Queues.
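To make the polling alternative concrete, here is a minimal sketch of a polling worker for the FifoQueue table defined earlier. It is an illustration under assumptions, not a tuned implementation: the one-second delay is an arbitrary choice, the processing step is left as a comment, and there is no error handling.

```sql
-- Minimal polling worker sketch (the delay value is arbitrary).
declare @item table (Payload varbinary(max));
declare @rows int;

while 1 = 1
begin
    delete from @item;   -- clear the row captured by the previous iteration

    begin transaction;

    with cte as (
        select top(1) Payload
        from FifoQueue with (rowlock, readpast)
        order by Id)
    delete from cte
    output deleted.Payload into @item;

    set @rows = @@rowcount;   -- 0 means the queue had no available row

    -- Process the row held in @item here, inside the same transaction.
    -- If the transaction is rolled back, the row returns to the queue.

    commit;

    -- Nothing to do: sleep before polling again.
    if @rows = 0
        waitfor delay '00:00:01';
end
```

The fixed delay is where the tuning problem described above shows up: a shorter delay lowers latency but wastes CPU on an empty queue, while a longer delay does the opposite.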