SQL Server table columns under the hood

October 20th, 2011

You can probably answer a question like ‘What columns does this table have?’ easily. Whether you use the SSMS object explorer, or sp_help, or you query sys.columns, the answer is fairly easy to find. But what if I ask ‘What are the physical columns of this table?’. Huh? Is there any difference? Let's see.

At the logical layer, a table has exactly the structure you declared in your CREATE TABLE statement, plus any modifications from ALTER TABLE statements. This is the layer at which you can look into sys.columns and see the table structure, or look at the table in SSMS object explorer, and so on. But there is also a lower layer, the physical layer of the storage engine, where the table might have a surprisingly different structure from what you expect.

Inspecting the physical table structure

To view the physical table structure you must use the undocumented system internals views: sys.system_internals_partitions and sys.system_internals_partition_columns:

select p.index_id, p.partition_number,

pc.leaf_null_bit,

coalesce(cx.name, c.name) as column_name,

pc.partition_column_id,

pc.max_inrow_length,

pc.max_length,

pc.key_ordinal,

pc.leaf_offset,

pc.is_nullable,

pc.is_dropped,

pc.is_uniqueifier,

pc.is_sparse,

pc.is_anti_matter

from sys.system_internals_partitions p

join sys.system_internals_partition_columns pc

on p.partition_id = pc.partition_id

left join sys.index_columns ic

on p.object_id = ic.object_id

and ic.index_id = p.index_id

and ic.index_column_id = pc.partition_column_id

left join sys.columns c

on p.object_id = c.object_id

and ic.column_id = c.column_id

left join sys.columns cx

on p.object_id = cx.object_id

and p.index_id in (0,1)

and pc.partition_column_id = cx.column_id

where p.object_id = object_id('...')

order by index_id, partition_number;

Let's inspect some simple table structures:

create table users (

user_id int not null identity(1,1),

first_name varchar(100) null,

last_name varchar(100) null,

birth_date datetime null);

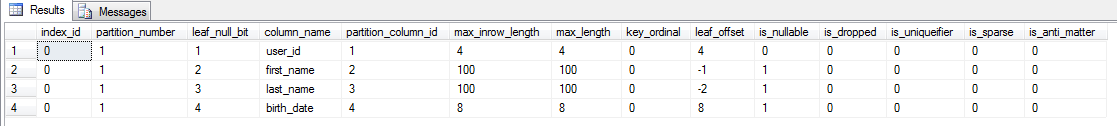

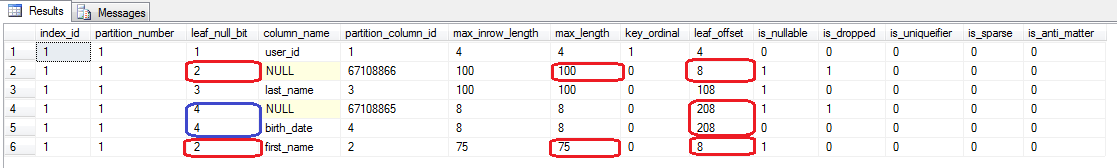

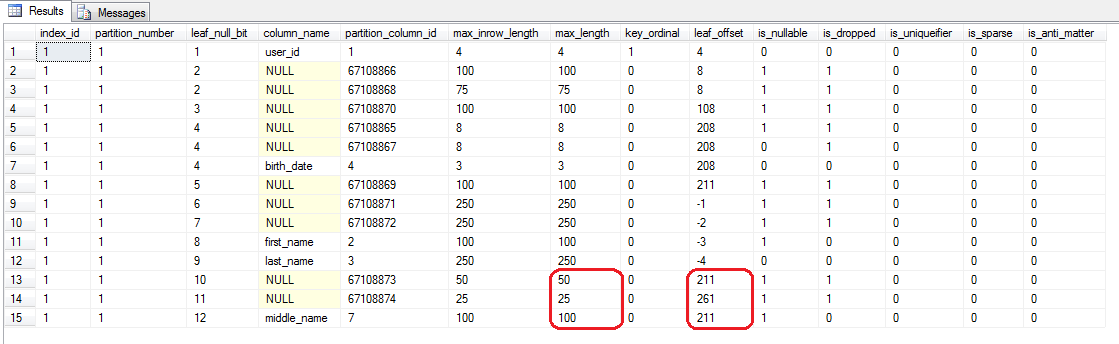

Running our query after, of course, we specify object_id('users'):

We can see that the physical structure is very much as we expected: the physical rowset has 4 physical columns, of the expected types and sizes. One thing to notice is that, although the column order is the one we specified, the columns are laid out on disk in a different order: the user_id is stored in the row at offset 4, followed by the birth_date at offset 8 and then by the two variable length columns (first_name and last_name), which have negative offsets, an indication that they reside in the variable size portion of the row. This should come as no surprise, as we know that the row format places all fixed length columns first in the row, ahead of the variable length columns.
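To make the fixed/variable split easier to see, here is a minimal sketch that labels each physical column of the users table by the sign of its leaf_offset. It relies on the same undocumented views as the query above, and it assumes the sign convention just described holds for every column; the storage_area alias is my own name, not something the engine exposes.

```sql
-- Sketch: label physical columns as fixed or variable length
-- based on the sign of leaf_offset (negative = variable portion).
select pc.partition_column_id,
       pc.leaf_offset,
       pc.max_inrow_length,
       case when pc.leaf_offset < 0 then 'variable length'
            else 'fixed length'
       end as storage_area          -- illustrative alias
from sys.system_internals_partitions p
join sys.system_internals_partition_columns pc
    on p.partition_id = pc.partition_id
where p.object_id = object_id('users')
    and p.index_id in (0, 1)        -- heap or clustered index only
order by case when pc.leaf_offset < 0 then 1 else 0 end,
         abs(pc.leaf_offset);
```

Fixed length columns are listed first, in offset order, followed by the variable length ones.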

Adding a clustered index

Our table right now is a heap. We should make user_id a primary key, so let's do that and check the table structure afterward:

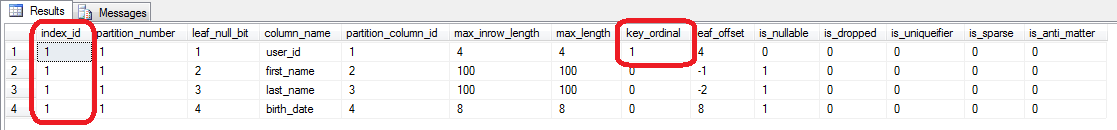

alter table users add constraint pk_users_user_id primary key (user_id);

As we can see, the table has changed from a heap into a clustered index (index_id changed from 0 to 1) and the user_id column has become part of the key.

Non-Unique clustered indexes

Now let's say that we want a different clustered index, perhaps by birth_date (maybe our application requires frequent range scans on this field). We would change the primary key into a non-clustered primary key (remember, the primary key and the clustered index do not have to be the same key) and add a clustered index on the birth_date column:

alter table users drop constraint pk_users_user_id;

create clustered index cdx_users on users (birth_date);

alter table users add constraint

pk_users_user_id primary key nonclustered (user_id);

Several changes right there:

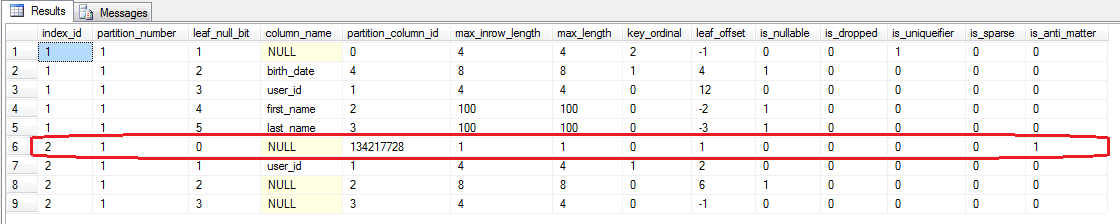

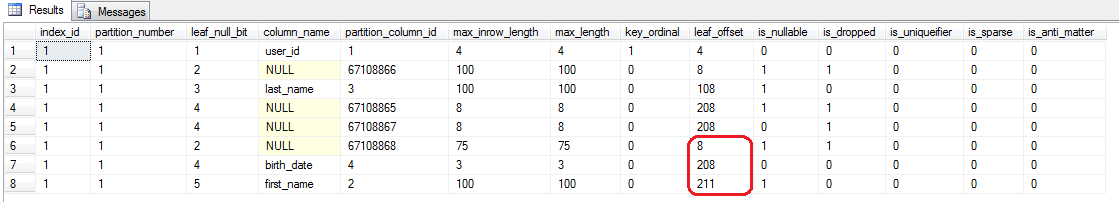

- A new index has appeared (index_id 2), which was expected: this is the non-clustered index that now enforces the primary key constraint on column user_id.

- The table has a new physical column, with partition_column_id 0. This new column has no name, because it is not visible in the logical table representation (you cannot select it, nor update it). This is a uniqueifier column (is_uniqueifier is 1), added because we did not specify that our clustered index is unique:

If the clustered index is not created with the UNIQUE property, the Database Engine automatically adds a 4-byte uniqueifier column to the table. When it is required, the Database Engine automatically adds a uniqueifier value to a row to make each key unique. This column and its values are used internally and cannot be seen or accessed by users.

Klaus Aschenbrenner has a good series of articles that explain uniqueifier columns in more detail.

- The non-clustered index that enforces the primary key constraint has 3 physical columns: user_id and two unnamed ones. This is because each row in the non-clustered index contains the corresponding clustered index row key values, in our case the birth_date column and the uniqueifier column.
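If you want to know which of your tables carry a uniqueifier, a simple approach is to look for clustered indexes that were not declared unique. This is a sketch using the documented sys.indexes catalog view; it reports the tables where the engine adds the hidden column, not the column itself.

```sql
-- Sketch: user tables whose clustered index is not unique,
-- and which therefore get a hidden uniqueifier column.
select object_schema_name(i.object_id) as schema_name,
       object_name(i.object_id)        as table_name,
       i.name                          as index_name
from sys.indexes i
where i.type = 1            -- clustered index
    and i.is_unique = 0     -- not declared UNIQUE
    and objectproperty(i.object_id, 'IsUserTable') = 1
order by schema_name, table_name;
```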

Online operations and anti-matter columns

Is the uniqueifier column the only hidden column in a table? No. Let's rebuild our non-clustered index, making sure we specify it to be an online operation:

alter index pk_users_user_id on users rebuild with (online=on);

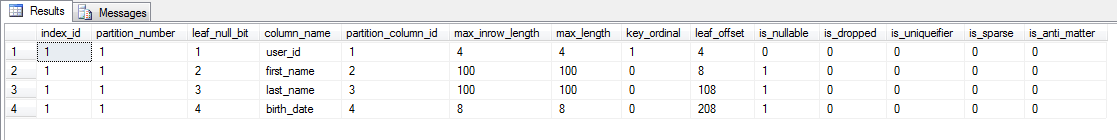

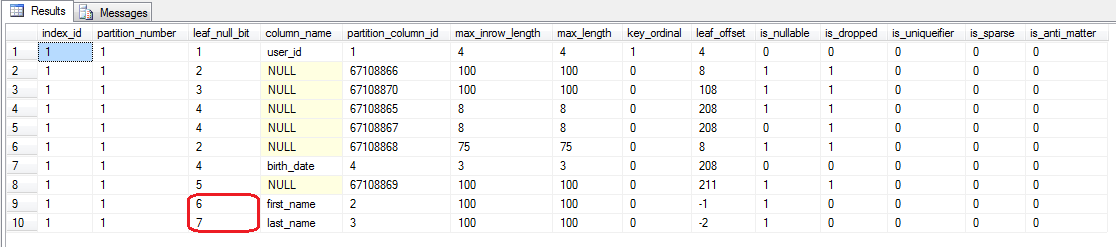

Notice how the non-clustered index now has one more column, a new column that has the is_anti_matter value 1. As you have probably guessed, this is an anti-matter column. For an in-depth explanation of the purpose of the anti-matter column I recommend reading Online Indexing Operations in SQL Server 2005:

During the build phase, rows in the new “in-build” index may be in an intermediate state called antimatter. This mechanism allows concurrent DELETE statements to leave a trace for the index builder transaction to avoid inserting deleted rows. At the end of the index build operation all antimatter rows should be cleared.

Note that even after the online operation finishes and the antimatter rows are removed, the antimatter column will be part of the physical rowset structure.

More hidden columns can exist in the table, for example if we enable change tracking:

alter table users ENABLE CHANGE_TRACKING;

Enabling change tracking on our table has added one more hidden column.
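Note that the ALTER TABLE statement above only succeeds if change tracking is already enabled at the database level and the table has a primary key. A minimal sketch of the prerequisite follows; the database name test is taken from the error message later in this post, and the retention settings are arbitrary values chosen for illustration.

```sql
-- Sketch: enable change tracking for the database first.
-- 'test' and the retention values are assumptions; adjust to your environment.
alter database test
    set change_tracking = on
    (change_retention = 2 days, auto_cleanup = on);
go

-- List the tables that currently have change tracking enabled.
select object_name(ctt.object_id) as table_name,
       ctt.is_track_columns_updated_on
from sys.change_tracking_tables ctt;
go
```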

Table structure changes

Next let's look at some operations that modify the table structure: adding, dropping and modifying columns. We're going to start anew with a fresh table for our examples:

create table users (

user_id int not null identity(1,1) primary key,

first_name char(100) null,

last_name char(100) null,

birth_date datetime null);

go

Next let's modify some columns: we want to make the birth_date not nullable and reduce the length of the first_name to 75:

alter table users alter column birth_date datetime not null;

alter table users alter column first_name char(75);

go

Notice how each of the two alter operations ended up adding a new column and dropping the old one. But also note how the null bit and the leaf_offset values have stayed the same. This means that the column was added ‘in place’, replacing the dropped column. This is a metadata-only operation that did not modify any record in the table; it simply changed how the data in the existing records is interpreted.

But now we realize that the 75 character length is wrong and we want to change it back to 100. Also, the birth_date column probably doesn’t need the time portion, so we can change it to a date type:

alter table users alter column birth_date date not null;

alter table users alter column first_name char(100);

go

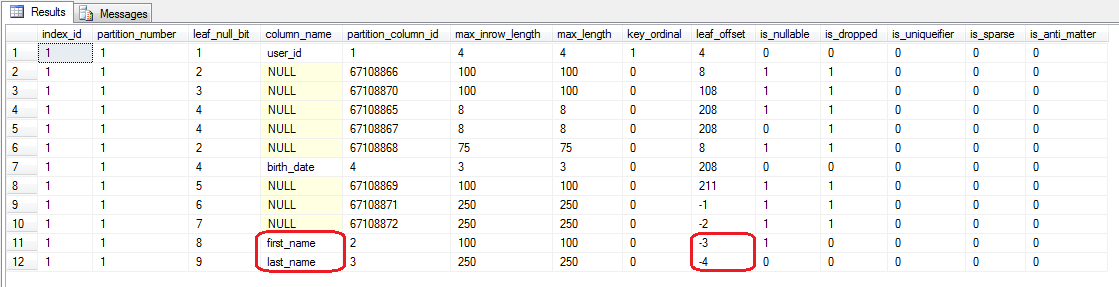

The birth_date column has changed type and now requires only 3 bytes, but the change occurred in place, just like the nullability change we did before: it remains at the same offset in the record and it has the same null bit. However, the first_name column was moved from offset 8 to offset 211, and the null bit was changed from 4 to 5. Because the first_name column has increased in size, it cannot occupy the same space as before in the record, and the record has effectively grown to accommodate the new first_name column. This happened despite the fact that the first_name column was originally of size 100, so in theory it could reclaim the old space it used in the record, but this is simply too much of a corner case for the storage engine to consider.

By now we figure that the fixed length 100 for the first_name and last_name columns was a poor choice, so we would like to change them to more appropriate variable length columns:

alter table users alter column first_name varchar(100);

alter table users alter column last_name varchar(100);

go

The type change from a fixed length column to a variable length column cannot be done in place, so the first_name and last_name columns get new null bit values. They also have negative leaf_offset values, which is typical for variable length columns as they don’t occupy fixed positions in the record.

Next let's change the length of the variable columns:

alter table users alter column first_name varchar(250);

alter table users alter column last_name varchar(250);

go

For this change the column length was modified without dropping the column and adding a new one. An increase in size for a variable length column is one of the operations that really alters the physical column, rather than dropping it and adding a new one to replace it. However, a decrease in size, or a nullability change, does again drop and add the column, as we can quickly check now:

alter table users alter column first_name varchar(100);

alter table users alter column last_name varchar(250) not null;

go

Finally, let's say we tried to add two more fixed length columns, but we were undecided on the name and length, so we added a couple of columns, then dropped them and added a new one:

alter table users add mid_name char(50);

alter table users add surname char(25);

alter table users drop column mid_name;

alter table users drop column surname;

alter table users add middle_name char(100);

go

This case is interesting because it shows how adding a new fixed column can reuse the space left in the row by dropped fixed columns, but only if the dropped columns are the last fixed columns. In our table the columns mid_name and surname were originally added at offsets 211 and 261 respectively, and their lengths added up to 75 bytes. After we dropped them, the middle_name column we added is placed at offset 211, thus reusing the space formerly occupied by the dropped columns. This happens even though the length of the newly added column is 100 bytes, bigger than the 75 bytes occupied by the dropped columns before.

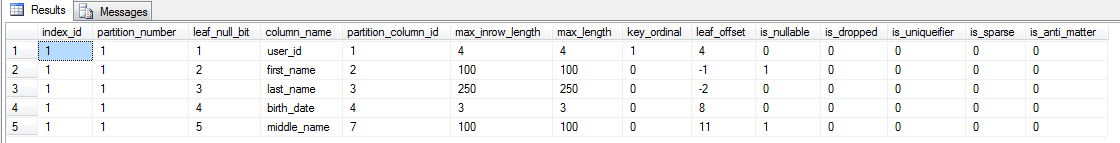

By now, our 5 column table has actually 15 columns in the physical storage format, 10 dropped columns and 5 usable columns. The table uses 412 bytes of fixed space for the 3 fixed length columns that have a total length of only 112 bytes. The variable length columns that now store the first_name and last_name are stored in the record after these 412 reserved bytes for fixed columns that are now dropped. Since the records always consume all the reserved fixed size, this is quite wasteful. How do we reclaim it? Rebuild the table:

alter table users rebuild;

go

As you can see, the rebuild operation got rid of the dropped columns and now the physical storage layout is compact, aligned with the logical layout. So whenever you change a table structure, remember that at the storage layer most changes are cumulative: most of the time they are implemented by dropping a column and adding a new column back, with the new type/length/nullability. Whenever possible a modified column reuses its space in the record, and newly added columns may reuse space previously used by dropped columns.
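To find out which tables might benefit from such a rebuild, one option is to count the dropped physical columns per rowset. The following is a sketch built on the same undocumented views used throughout this post; it only counts dropped columns and does not attempt to estimate the wasted bytes, since dropped space can be partially reused as shown above.

```sql
-- Sketch: count dropped physical columns per table, index and partition.
select object_schema_name(p.object_id) as schema_name,
       object_name(p.object_id)        as table_name,
       p.index_id,
       p.partition_number,
       count(*)                        as dropped_columns
from sys.system_internals_partitions p
join sys.system_internals_partition_columns pc
    on p.partition_id = pc.partition_id
where pc.is_dropped = 1
    and objectproperty(p.object_id, 'IsUserTable') = 1
group by p.object_id, p.index_id, p.partition_number
order by dropped_columns desc;
```

Rowsets near the top of the result are the most likely candidates for a rebuild.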

Partitioned Tables

When partitioning is taken into account, another dimension of the physical table structure is revealed. To start, let's consider a typical partitioned table:

create partition function pf (date) as range for values ('20111001');

go

create partition scheme ps as partition pf all to ([PRIMARY]);

go

create table sales (

sale_date date not null,

item_id int not null,

store_id int not null,

price money not null)

on ps(sale_date);

go

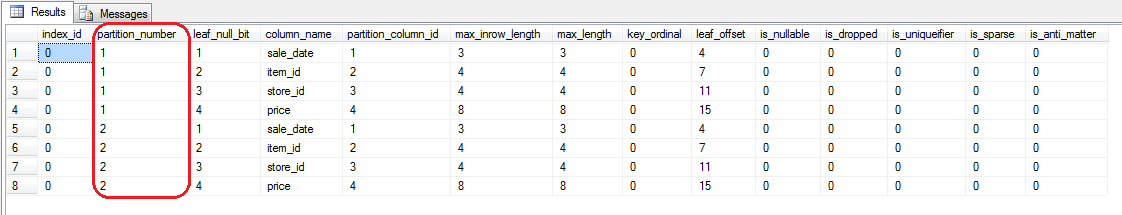

As we know, partitioned tables are, from a physical point of view, a collection of rowsets that belong to the same logical object (the ‘table’). Looking under the hood shows that our table has two rowsets, and they have identical column structure. Typically new partitions are added by an ETL process that uploads data into a staging table, which then gets switched in using a fast partition switch operation:

create table sales_staging (

sale_date date not null,

item_id int not null,

store_id int not null,

price numeric(10,2) not null,

constraint check_partition_range

check (sale_date = '20111002'))

on [PRIMARY];

alter partition scheme ps next used [PRIMARY];

alter partition function pf() split range ('20111002');

go

alter table sales_staging switch to sales partition $PARTITION.PF('20111002');

go

Msg 4944, Level 16, State 1, Line 2

ALTER TABLE SWITCH statement failed because column 'price' has data type numeric(10,2) in source table 'test.dbo.sales_staging' which is different from its type money in target table 'test.dbo.sales'.

OK, so we made a mistake in the staging table. Let's correct it, then try to switch it in again:

alter table sales_staging alter column price money not null;

alter table sales_staging switch to sales partition $PARTITION.PF('20111002');

go

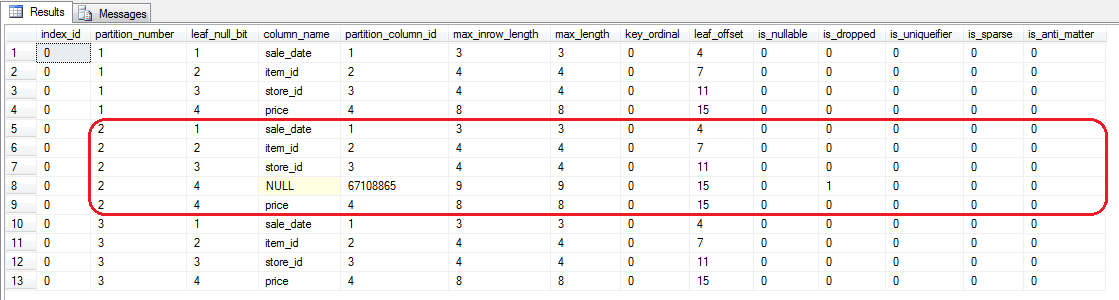

We see that the partition switch operation has switched the rowset of the staging table into the second partition of the sales table. But since the staging table had an operation that modified a column type, which in effect dropped the numeric price column and added a new price column of the appropriate money type, the second rowset of the partitioned table now has 5 columns, including a dropped one. The partition switch operation brings the staging table into the partitioned table as is, dropped columns and all. What that means is that partitions of the partitioned table can have, under the hood, a completely different physical structure from one another. Normally this should be of little concern, just a curiosity to wow the newbies at DBA cocktail parties, but this problem has a darker side, known as KB2504090:

This issue occurs because the accessor that SQL Server uses to insert data into different partitions recognizes the metadata changes incorrectly. When data is inserted into the new partition that is created after a column is dropped, the number of the maximum nullable columns in the new partition may be one less than the number of the maximum nullable columns in the old partition.

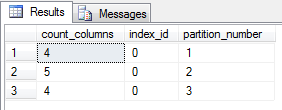

If you ever find that your partitioned tables do not have a uniform internal structure across all partitions, I recommend you rebuild the partition that is different. This simple query can be used to look at the number of physical columns in each partition of a table:

select count(*) as count_columns,

index_id,

partition_number

from sys.system_internals_partitions p

join sys.system_internals_partition_columns pc

on p.partition_id = pc.partition_id

where p.object_id = object_id('sales')

group by index_id, partition_number;

go

This query shows that partitions 1 and 3 have 4 physical columns, while partition 2 has 5. We can rebuild partition 2:

alter table sales rebuild partition = 2;

go

With this operation we have rebuilt partition number 2 from scratch and removed all dropped columns in the process.

Note that my query above would only detect differences in the number of physical columns, but two partitions can still have a very different physical layout even if they have the same number of physical columns, e.g. one can have physical column number 9 dropped and physical column number 10 used, while the other can have the opposite. Use your judgement when looking at the internal physical column layout to understand if the partitions are truly the same.
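As a starting point for that closer look, here is a sketch that compares partitions column by column rather than by count. It returns the physical columns of the sales table that are missing from some partition, or whose is_dropped, leaf_offset or leaf_null_bit values differ between partitions. It only compares these attributes, which are the ones I chose for illustration, so an empty result is a good sign but not a proof that the layouts are identical.

```sql
-- Sketch: physical columns whose layout is not identical in every partition.
with cols as (
    select p.index_id,
           p.partition_number,
           pc.partition_column_id,
           cast(pc.is_dropped as int) as is_dropped,  -- min/max do not accept bit
           pc.leaf_offset,
           pc.leaf_null_bit
    from sys.system_internals_partitions p
    join sys.system_internals_partition_columns pc
        on p.partition_id = pc.partition_id
    where p.object_id = object_id('sales')
),
parts as (
    -- number of partitions per index
    select index_id,
           count(distinct partition_number) as partition_count
    from cols
    group by index_id
)
select c.index_id,
       c.partition_column_id,
       count(*)           as partitions_with_column,
       t.partition_count,
       min(c.is_dropped)  as min_is_dropped,
       max(c.is_dropped)  as max_is_dropped,
       min(c.leaf_offset) as min_leaf_offset,
       max(c.leaf_offset) as max_leaf_offset
from cols c
join parts t
    on t.index_id = c.index_id
group by c.index_id, c.partition_column_id, t.partition_count
having count(*) <> t.partition_count
    or min(c.is_dropped) <> max(c.is_dropped)
    or min(c.leaf_offset) <> max(c.leaf_offset)
    or min(c.leaf_null_bit) <> max(c.leaf_null_bit)
order by c.index_id, c.partition_column_id;
```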